Splunk HF - Advanced Data Routing & Cloning

Background

As we integrate more systems to collect data for analytics, Splunk plays a major role in gathering machine data and making it available for a wide range of analytical capabilities on top of the platform. To get the data into a form that eases analysis and reduces the load on Splunk, Cribl processes and transforms the data on the wire in near real time.

These needs call for flexibility in how we route data between different streaming agents, within the approved network paths, across all our environments.

Problem

POC Definition

The POC must demonstrate streaming data routing and cloning from a Splunk HF (heavy forwarder) agent to a MidTier layer, which is either another Splunk HF or Cribl.

The POC covers the following scenarios:

- Simple data routing use-case

- Simple data cloning use-case

- Advanced data routing/cloning use-case

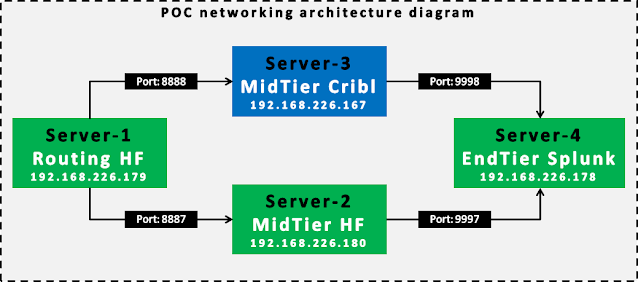

The environment components used to perform the POC are as follows:

- Server-1 → Routing HF: monitors data for different indexes and sourcetypes and applies the routing/cloning logic.

- Server-2 → MidTier HF: forwards the data received from the Routing HF to the EndTier Splunk layer.

- Server-3 → MidTier Cribl: forwards the data received from the Routing HF to the EndTier Splunk layer.

- Server-4 → EndTier Splunk: a standalone Splunk instance that receives the data sent by the MidTier, indexes it, and makes it available for searching.

The diagram below shows the network architecture of the environment:

Scenarios

Scenario-1:

Referring to the diagram below, Scenario-1 (Simple Routing) routes (cuts) index [ idx_3 ] away from MidTier HF and sends it to MidTier Cribl instead, using the Routing HF.

Scenario-2:

Referring to the diagram below, Scenario-2 (Simple Cloning) clones index [ idx_3 ] so that it reaches MidTier Cribl as well as MidTier HF, using the Routing HF.

Scenario-3:

Referring to the diagram below, Scenario-3 (Advanced Routing/Cloning) either routes or clones index [ idx_1 ] from MidTier HF to MidTier Cribl using the Routing HF. The idx_1 data arrives with sourcetype [ st_idx_a ], which index [ idx_2 ] uses as well. Because both indexes share the same sourcetype, routing needs an additional level of filtering.

Extreme Cases:

- The index name is a substring of other index names.

- Multiple indexes need to be routed, not just one.

Configuration

Routing Server

The Routing HF layer is the entry point for incoming data and is responsible for routing/cloning it to the MidTier Cribl/HF layer.

Depending on the scenario, the objective is to route or clone idx_3 or idx_1 data to MidTier Cribl alone or to both MidTier HF and MidTier Cribl.

To do this, we define routing rules in props.conf & transforms.conf, based on the configured inputs & outputs.

Simple routing rules are keyed in props.conf by <sourcetype>, <source> or <host>. Here we select the data that arrives with sourcetype [ st_idx_a ]. The props.conf stanza uses the following property:

- TRANSFORMS-routing: maps to the routing rule in transforms.conf. The transforms.conf stanza name must equal the value of the TRANSFORMS-routing property in props.conf.

The transforms.conf stanza contains several properties:

- SOURCE_KEY: specifies the field that the regex is applied to.

- REGEX: specifies an extra layer of filtering on the data arriving with the selected sourcetype (in our case).

- DEST_KEY: specifies whether the data goes out over the TCP or the syslog protocol. We require TCP, so the value is _TCP_ROUTING.

- FORMAT: specifies the output group(s) configured in outputs.conf. To clone the data to both MidTier HF and MidTier Cribl, the value lists both output groups separated by a comma: [ routing-cribl,default-autolb-group ]

inputs.conf

Because the Routing HF is the first layer, it monitors some files to simulate streaming data arriving from customer sources.

Below we configure three sources that send data to three different indexes. idx_1 & idx_2 share the sourcetype [ st_idx_a ], while idx_3 has its own sourcetype [ st_idx_b ].
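The three monitored sources could be configured along the following lines. This is a minimal sketch: the monitor paths are hypothetical placeholders, and the index names follow the idx_N spelling used in the scenarios. Only the index-to-sourcetype mapping comes from the description above.

```ini
# inputs.conf on the Routing HF (sketch; monitor paths are hypothetical)

# Source 1 -> idx_1, shares sourcetype st_idx_a with idx_2
[monitor:///opt/poc/data/source_1.log]
index = idx_1
sourcetype = st_idx_a

# Source 2 -> idx_2, same sourcetype as idx_1
[monitor:///opt/poc/data/source_2.log]
index = idx_2
sourcetype = st_idx_a

# Source 3 -> idx_3, has its own sourcetype
[monitor:///opt/poc/data/source_3.log]
index = idx_3
sourcetype = st_idx_b
```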

props.conf

As described, props.conf selects the data by sourcetype, source or host and sets the TRANSFORMS-routing property.

Scenario < 1 > & < 2 >
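For these two scenarios the props.conf stanza might look like the sketch below. It assumes the stanza is keyed on sourcetype [ st_idx_b ], the sourcetype used only by idx_3. The transform name route_idx_3 is a hypothetical name chosen for illustration.

```ini
# props.conf on the Routing HF, Scenarios 1 and 2 (sketch)
# st_idx_b is the sourcetype carried only by idx_3
[st_idx_b]
# "route_idx_3" is a hypothetical name; it must match a stanza name in transforms.conf
TRANSFORMS-routing = route_idx_3
```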

Scenario < 3 >
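For Scenario 3 the stanza is keyed on the shared sourcetype [ st_idx_a ], so it selects events from both idx_1 and idx_2; the per-index filtering is left to transforms.conf. A minimal sketch, with route_idx_1 as a hypothetical transform name:

```ini
# props.conf on the Routing HF, Scenario 3 (sketch)
# st_idx_a is shared by idx_1 and idx_2, so this stanza selects events from both
[st_idx_a]
# "route_idx_1" is a hypothetical name; it must match a stanza name in transforms.conf
TRANSFORMS-routing = route_idx_1
```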

transforms.conf

To implement Scenarios < 1 >, < 2 > & < 3 > we vary the values of the SOURCE_KEY & FORMAT properties.

Scenario < 1 >

Because the scenario routes the idx_3 data to MidTier Cribl, FORMAT points to the outputs.conf output group [ routing-cribl ], which points to the Cribl destination.
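A sketch of what this rule could look like. The stanza name route_idx_3 is hypothetical and has to match the TRANSFORMS-routing value in props.conf. REGEX = . is an assumption that every event of the selected sourcetype should be routed.

```ini
# transforms.conf on the Routing HF, Scenario 1 (sketch)
[route_idx_3]
# SOURCE_KEY is left at its default (_raw); "." matches every non-empty event
REGEX = .
# Route over TCP
DEST_KEY = _TCP_ROUTING
# Only the Cribl output group is listed, so these events go to Cribl alone
FORMAT = routing-cribl
```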

Scenario < 2 >

Because the scenario clones the idx_3 data to MidTier Cribl while still sending it to MidTier HF, FORMAT points to both outputs.conf output groups [ routing-cribl,default-autolb-group ], which point to the MidTier Cribl and MidTier HF destinations.
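The cloning variant differs from simple routing only in the FORMAT value. A minimal sketch, again using the hypothetical stanza name route_idx_3 and assuming all events of the selected sourcetype are cloned:

```ini
# transforms.conf on the Routing HF, Scenario 2 (sketch)
[route_idx_3]
# SOURCE_KEY is left at its default (_raw); "." matches every non-empty event
REGEX = .
DEST_KEY = _TCP_ROUTING
# Two output groups, comma-separated: each matching event is sent to both
FORMAT = routing-cribl,default-autolb-group
```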

Scenario < 3 >

Scenario-3 either routes or clones [ idx_1 ], bearing in mind that the sourcetype selected in props.conf [ st_idx_a ] covers both [ idx_1 & idx_2 ]. We therefore set SOURCE_KEY to [ _MetaData:Index ], which lets the REGEX property filter by index, and set REGEX to [ idx_1 ].

In effect, transforms.conf receives every event with sourcetype [ st_idx_a ] as selected by props.conf. Because two indexes [ idx_1 & idx_2 ] send data with that sourcetype, the combination of SOURCE_KEY & REGEX applies the routing/cloning rule only to events whose index matches the REGEX value [ idx_1 ].

Finally, as in Scenarios < 1 > & < 2 >, the FORMAT property lists a single output group for routing or multiple output groups for cloning. In this example we route the data only through the Cribl output group.
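Put together, the Scenario 3 rule might look like the sketch below. The stanza name route_idx_1 is hypothetical and has to match the TRANSFORMS-routing value in props.conf. The REGEX is left unanchored here, as in the description; the Extreme Cases section discusses why anchoring matters.

```ini
# transforms.conf on the Routing HF, Scenario 3 (sketch)
[route_idx_1]
# Apply the regex to the event's index name instead of the raw event text
SOURCE_KEY = _MetaData:Index
# Unanchored: matches any index name that contains "idx_1"
REGEX = idx_1
DEST_KEY = _TCP_ROUTING
# Routing only: a single output group. For cloning, use
# FORMAT = routing-cribl,default-autolb-group
FORMAT = routing-cribl
```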

Extreme Cases Handling:

- The index name is a substring of other index names.

Suppose we want to route an index called [ idx_1 ] and there is another index called [ idx_1_1 ]. A REGEX value of [ idx_1 ] matches both names and routes both indexes, while we need [ idx_1 ] only. The pattern [ idx_1$ ] anchors the match to the end of the index name, so [ idx_1_1 ] no longer matches. To also exclude names that merely end in idx_1, add a start anchor as well: [ ^idx_1$ ].

- Multiple indexes need to be routed, not just one.

To match multiple indexes for routing/cloning, combine the patterns in the REGEX value as follows: [ idx_1$|idx_2$ ].

- [ idx_1$ ] and [ idx_2$ ] each use [ $ ] to anchor the match to the end of the index name.

- The pipe [ | ] acts as an OR condition, so an event matches if its index matches either [ idx_1$ ] or [ idx_2$ ].
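Both cases can be sketched as transforms.conf stanzas. The stanza names are hypothetical, and only the one referenced from props.conf would take effect. Note that the sketch adds a leading ^ on top of the $ described above. That is an addition on my part: it also rules out index names that merely end in idx_1 or idx_2.

```ini
# transforms.conf on the Routing HF, extreme cases (sketch; stanza names are hypothetical)

# Case 1: route idx_1 only, not idx_1_1 or any other name containing idx_1
[route_idx_1_only]
SOURCE_KEY = _MetaData:Index
# ^ and $ anchor both ends, so only the exact name idx_1 matches
REGEX = ^idx_1$
DEST_KEY = _TCP_ROUTING
FORMAT = routing-cribl

# Case 2: route idx_1 and idx_2, and nothing else
[route_idx_1_and_idx_2]
SOURCE_KEY = _MetaData:Index
# The group keeps the anchors applied to both alternatives
REGEX = ^(idx_1|idx_2)$
DEST_KEY = _TCP_ROUTING
FORMAT = routing-cribl
```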

outputs.conf

As described in the scenarios, we have two destinations: MidTier HF & MidTier Cribl. Under the [tcpout] stanza we set defaultGroup to [default-autolb-group], and then defined [tcpout:default-autolb-group], which points to the MidTier HF IP:PORT.

We also defined another group for MidTier Cribl, [tcpout:routing-cribl], with its own IP:PORT.
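A minimal sketch of this outputs.conf. The group names come from the description above; the IP addresses are placeholders because the MidTier addresses are not given, and the ports are the ones the MidTier HF (8887) and MidTier Cribl (8888) sections say they listen on.

```ini
# outputs.conf on the Routing HF (sketch; IP addresses are placeholders)
[tcpout]
# Events that no routing rule redirects go to this group
defaultGroup = default-autolb-group

# MidTier HF destination
[tcpout:default-autolb-group]
server = <midtier-hf-ip>:8887

# MidTier Cribl destination, referenced by FORMAT in transforms.conf
[tcpout:routing-cribl]
server = <midtier-cribl-ip>:8888
```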

MidTier HF

MidTier HF forwards incoming data from the Routing HF to the EndTier Splunk. It is configured as follows:

inputs.conf

inputs.conf is configured to receive data through SplunkTCP port 8887, the port opened for data coming from the Routing HF.
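A minimal sketch of such an input, assuming no further restrictions on the listener:

```ini
# inputs.conf on the MidTier HF (sketch)
# Listen for Splunk-to-Splunk traffic from the Routing HF on port 8887
[splunktcp://8887]
disabled = 0
```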

outputs.conf

As mentioned, MidTier HF forwards the incoming traffic to EndTier. outputs.conf reflects this by configuring tcpout to the EndTier Splunk server 192.168.226.178 on port 9997.
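This forwarding could be sketched as follows. The group name endtier-splunk is hypothetical; the address and port are the ones given above.

```ini
# outputs.conf on the MidTier HF (sketch; group name is hypothetical)
[tcpout]
defaultGroup = endtier-splunk

# EndTier Splunk receiver
[tcpout:endtier-splunk]
server = 192.168.226.178:9997
```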

MidTier Cribl

Source Configuration:

A SplunkTCP source is configured to receive data on port 8888 from any address (0.0.0.0).

Route Configuration:

A Route is then configured to filter data from source splunk:SPLUNK_72_HF, pass it to the pipeline called Splunk-Route, and then send it to the destination called Splunk:Splunk.

Pipeline Configuration:

The pipeline passes the data through unchanged except for adding a new field called cribl_flag with value FromCribl. This tags the events coming out of Cribl so that they are easier to find in Splunk at the EndTier.

Destination Configuration:

Finally, the destination is configured to send data to EndTier Splunk on 192.168.226.178, port 9998.

EndTier Splunk

EndTier is where the data is indexed. In the current POC it is a standalone server; in other deployments it may be the indexer layer. Below is the configuration used for the standalone server.

inputs.conf
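A sketch of what this file might contain, assuming both MidTier connections arrive as SplunkTCP: port 9997 is the one MidTier HF forwards to and port 9998 is the one the Cribl destination sends to.

```ini
# inputs.conf on the EndTier Splunk (sketch; assumes splunktcp on both ports)

# Data forwarded by the MidTier HF
[splunktcp://9997]
disabled = 0

# Data sent by the MidTier Cribl destination
[splunktcp://9998]
disabled = 0
```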

The configuration above enables receiving data on the mentioned ports, which carry the connections from the MidTier HF & MidTier Cribl layers.

Please leave a comment with any questions, discussion points or recommendations.

I would also like to hear which topics you would like me to cover next.
